So you spend ages setting up a web hook so that you can receive notifications when something interesting happens at some API provider that you use. But they never seem to call it, and you wonder why. Have you ever considered how this might feel from the web hook’s perspective? Well, that’s what this song is about.

Yes, this is a very silly bit of anthropomorphisation, but whatever, it’s a fun idea and makes for a nice song! Style-wise, there’s a lot of Morcheeba and Zero 7 trip-hop influence in here, but I doubt they would sing quite such a happy-sounding chorus! For their sake, in keeping with the genre, I found some CR-78 drum machine loops, a lovely Wurlitzer electric piano (actually synthesised from scratch by Logic’s Sculpture instrument), a chilled-out wah-wah guitar, a wacky, chaotic texture from Native Instruments’ Komplete “25” library, some lovely Logic strings, and Logic’s drummer on a nice natural-sounding kit. I thought that I’d been using Logic’s players a bit too much in my songs lately, mainly because I’m lazy, so in this one I played the (real!) bass guitar part myself, also to prove to myself that I can.

Vocals are by Synthesizer V 2.0, as usual, using Solaria 2, with another Solaria and Mai on backing vocals.

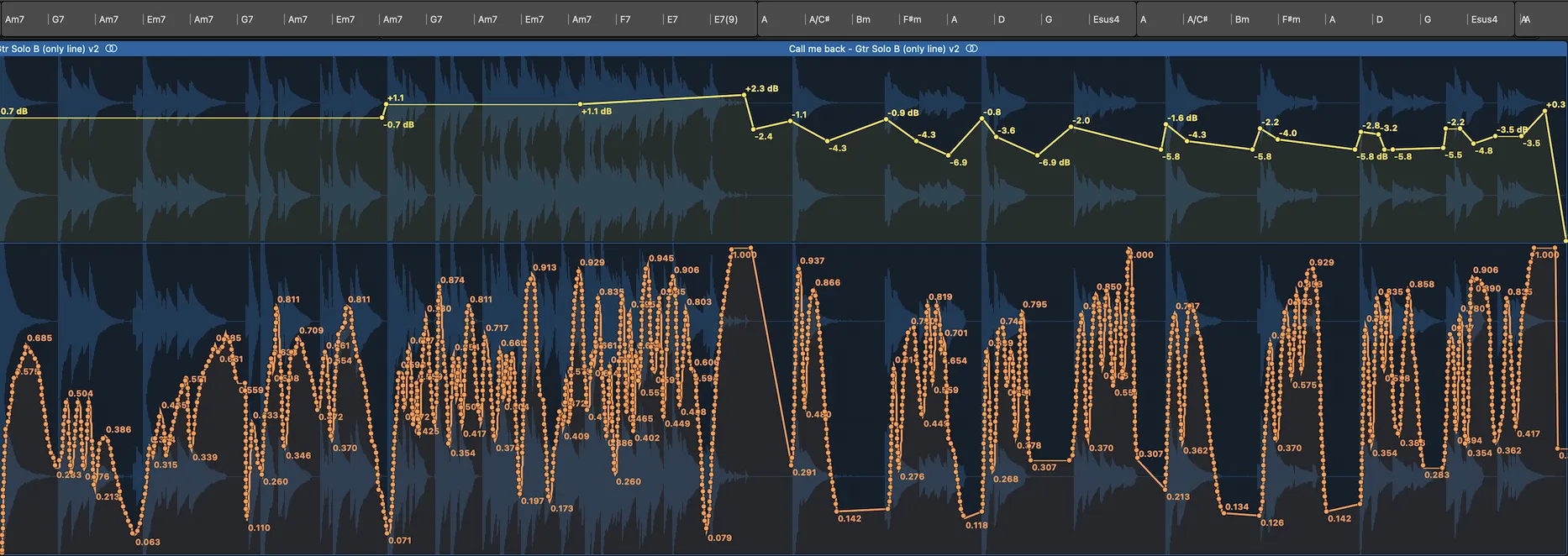

I commissioned Uama on fiverr.com, all the way over in Argentina, to play the guitar part, and he did a great job in creating the chilled, trippy style I was after, though I ended up redoing the wah effect with a ton of automation using the ribbon modulation controller on my Arturia Minilab II keyboard, some of which you can see in the featured image in this post.

As in some of my other songs, the lyrics are deliberately ambiguous – if you have no idea what a web hook is, you can be quite comfortable thinking that this song is about a slightly needy person that wants a call back after exchanging contact details, and is a bit unhappy about being ghosted. Those of you who understand what it’s really about might spot a few clues.

The first inkling comes from the “all those clicks, all those emails” that might be required in setting up a web hook. Then “I wanna say that it’s ok in two hundred ways” should cause you to recall that “200 OK” is an expected HTTP response code from a request sent to a web hook (yes it’s likely to be a 204, but 200 will do!). “Write a little message and post it to me”, well this is asking the caller to make an HTTP POST request, obviously! “I need a little token if you wanna know me better”, is not asking for a gift or bribe, but an authentication token, usually an HMAC signature, to prove that the caller is legit. “I want your body, not just what’s in your head”, refers of course (well, apart from the obvious) to the header and body parts of an HTTP request; the former will often contain the aforementioned token. Aside from all that it’s really just them complaining that the endpoint that was so carefully set up isn’t being used. That’s a bit sad, so we can feel a little sorry for the poor little web hook, but at least it sounds cheerful and optimistic, and resigned to continuing to wait for something to happen.

I have added this track to my “Developer Music” album.

[Verse]

When we first met

we got everything set up

all those clicks

all those emails

so I know you’ve got my number

but you never call me

did I give you the wrong impression

that I wanted to hear from you?

didn’t I make it clear?

that’s why I’m here

[Chorus]

I just want you to

call me back

to know that I’m wanted, so please

call me back

I’m just sitting here waiting for you to

call me back

Please let me know if I’m wasting my time

(call me back)

I’ll be here waiting for you

[Verse]

I wanna say that it’s ok in two hundred ways

Write a little message and post it to me

It might be a rejection; I’d prefer a love letter

but I need a little token if you wanna know me better

I want your body, not just what’s in your head

I’m listening out for every word that you said

I’m trying to keep this uncomplicated

I'm so lonely without you

[Break/solo]

[Chorus]

I just want you to

call me back

to know that I’m wanted, so please

call me back

I’m just sitting here waiting for you to

call me back

please let me know if I’m wasting my time

(call me back)

I’ll be here waiting for you

call me back

I wanna know that I’m wanted, so please

call me back

I’m just sitting here waiting for you to

call me back

Please let me know if I’m wasting my time

(call me back)

I’ll be here waiting for you

If you like this song, please consider supporting me by buying my albums on Bandcamp, and sharing links to my music on your socials.