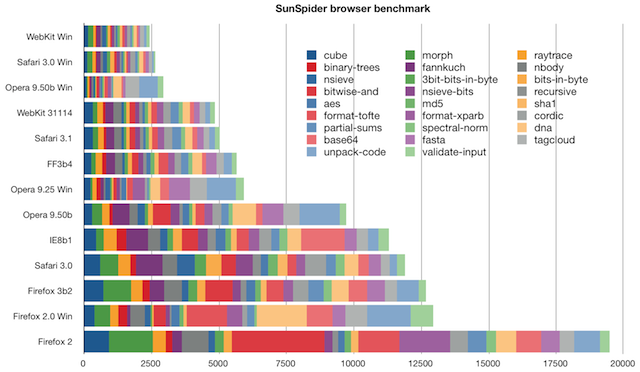

The WebKit guys have put together a new JavaScript benchmark under the name “SunSpider”. It’s intended to go further than simple benchmarks like Celtic Kane’s and try to emulate real-world tasks. Safari/WebKit has been getting pretty quick on these benchmarks anyway, but this new one really shows its strengths. Various people have posted their results in the comments on that post, but there’s no compilation for easy comparison, so I’ve put one together.

Updated: Added WebKit Win and Opera 9.5b Win

Updated: Failed to run completely on Opera 9.5b Mac

Updated: Some stats for Opera 9.5b Mac and IE6

Updated March 18th: Added Safari 3.1, FF3b4, IE8

One big caveat with these results: they were all run on my 2GHz Core Duo MacBook with 2GB RAM running 10.5.1, and the Windows benchmarks were run under Windows XP in Parallels 3. Having said that, I’ve had similar results to those that others have posted for “real” Windows installs, so they should at least be meaningful relative to each other. Also, the Windows benchmarks had quite high error percentages, so YMMV quite a lot.

Anyway, enough waffle, here’s a pretty graph:

What’s most impressive here is that Safari on Windows is seriously fast – over 20x faster than IE7! Weirdly, it’s also over twice as fast as Mac WebKit despite the fact that it’s running inside Parallels!

There’s no Mac Opera because 9.24 crashes on launch for me, and their 9.25 download page currently 404s.

I’ve attached the spreadsheet of results as a PDF in case anyone’s interested.

Updated: I added WebKit Win and Opera 9.5b Win, and I retested IE7 to see if it could get any better, and it improved a bit – now it’s only 15x slower.

Updated: I had a go at running Mac Opera 9.5b. However, it fails to run all the benchmarks, or at least fails to report the timings correctly (the tests themselves appear to run OK), so it doesn’t come up with a final score. The offending results are:

regexp: NaNms +/- NaN%

dna: NaNms +/- NaN%

Updated: I spotted the Opera 9.5b Mac benchmark values manually and dropped them in – results are not amazing. Also added IE6 on Win2k (also in Parallels) which got very good certainty values of ~1.5% but hysterically bad results overall!

Updated March 18th: Added Safari 3.1 and updated the Mac WebKit results for today. Added FF3b4 – a big improvement over 2. Added IE8 – a welcome showing for MS now they have seen the light. I removed IE 6 and 7 as their performance was so bad that it was making the graph hard to read!

I’m curious about two things: 1) The confidence intervals for each of the results, and 2) the result of the WebKit nightly build on Windows.

Out of curiosity, since you’re trying betas of saf and ff, do you think you can try this out in an Opera 9.5 build too? The Opera Desktop Team blog should have some of those available for you.

Opera changed its scripting engine from Linear_B in earlier Presto versions (“Core”, that is op7-9.25) to Futhark in Kestrel (“Core2”, op9.50) and Peregrine (“Core2”, op10+), and Futhark is considerably faster than Linear_B for most things. On my system, op9.5 solidly beat saf3.0.4. The latest WebKit nightly crashes when loading, so I haven’t been able to test that one.

The confidence intervals for the Mac browsers were much better – typically 1-5%, whereas the Windows browsers were 15-30%, with an occasional 85%. This is probably an artefact of using Parallels.

I’ve just updated the benchmarks to include Opera 9.5a and WebKit Win.

Done.

I understand you ran the Windows tests under Parallels. Are you sure the timing is reliable? If the script uses the VM’s clock to time the results, it is potentially skewed. I’ve done timing on VMs and the results were often wrong because the VM’s clock was not running correctly.

I agree that the timing accuracy is probably quite a way out. However, I don’t think it’s unreasonable to assume that all browsers in the same environment are subject to roughly the same variation. Another factor is that when you run the benchmarks on IE vs WebKit, the difference is visually pretty obvious too. So the stats I’ve done here are not in any way authoritative, but they are at least roughly reproducible on my system, and seem to at least vaguely correlate with the user experience in each browser. One conclusion is still inescapable – IE sucks, WebKit and Opera don’t :*)

Someone else is of course entirely free to post a similar set of benchmarks run on a real Windows box – I just don’t happen to have one handy.
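To put the “same environment” argument in concrete terms, here is a small Python sketch. It assumes the simplest possible model, where a VM clock runs at some constant multiple of real time; the helper names and all the timings are hypothetical and are not taken from the results above.

```python
def skewed(true_ms, clock_rate):
    """Elapsed time reported by a clock running at clock_rate x real time."""
    return true_ms * clock_rate


def speed_ratio(slow_ms, fast_ms):
    """How many times longer the slow browser took than the fast one."""
    return slow_ms / fast_ms


# Hypothetical "true" totals for a slow and a fast browser, in milliseconds.
true_slow, true_fast = 3000.0, 200.0

# Same skew for both runs: the absolute numbers are wrong, the ratio is not.
uniform = speed_ratio(skewed(true_slow, 0.8), skewed(true_fast, 0.8))

# Different skew per run: now the ratio itself is distorted.
uneven = speed_ratio(skewed(true_slow, 0.8), skewed(true_fast, 1.1))

print(f"true ratio:    {speed_ratio(true_slow, true_fast):.2f}x")
print(f"uniform skew:  {uniform:.2f}x")
print(f"uneven skew:   {uneven:.2f}x")
```

So the relative comparison only survives if the skew really is about the same for every run, which is exactly the part that can’t be checked from inside the VM.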

The WebKit developers recently released a benchmark on pure ECMAScript core performance, SunSpider 0.9. Jeff Atwood of Coding Horror fame has an analysis of how various browsers perform in The Great Browser JavaScript Showdown. Marcus Bointon has a sim…

Any chance you could add Opera 9.5 for mac to the graphs? Not sure what Opera 9.5a means, it’s in beta AFAIK. If you want the latest builds for mac/win/*nix this is the place: http://my.opera.com/desktopteam/blog/

My mistake – it is indeed a beta. I was carrying over the request that the Opera 9.5 alpha be added and didn’t notice that it was actually a beta. I’m downloading the Mac beta now. BTW the main Opera download page is still broken for me.

As you may have seen from the post update, I didn’t succeed in running the Opera 9.5 Mac beta. I guess that’s why it’s still a beta…

I posted some Opera 9.5b results by watching the benchmark running manually and noting the missing timings.

A confidence interval over 5% is *huge*. We typically see figures in the range of 0.1-0.3% during our testing. It often requires ensuring that very few background processes are running, but it makes for much more reliable results. 85% variance means that some iterations were nearly twice as fast as others, which indicates something is wrong either in the application being tested or the environment in which the test is run.
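For anyone wondering how a “+/- N%” figure of this kind can be derived, here is a minimal Python sketch. It assumes a 95% interval based on Student’s t-distribution over a handful of runs, which may not be exactly what SunSpider itself does; the function name `mean_and_ci_percent` and both sets of timings are made up for illustration.

```python
import math
import statistics

# Two-sided 95% critical values of Student's t, keyed by degrees of freedom (n - 1).
T_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
        6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def mean_and_ci_percent(samples_ms):
    """Return (mean, half-width of the 95% interval as a percentage of the mean)."""
    n = len(samples_ms)
    if n - 1 not in T_95:
        raise ValueError("this sketch only handles 2 to 11 samples")
    mean = statistics.mean(samples_ms)
    # Standard error of the mean, using the sample standard deviation.
    std_err = statistics.stdev(samples_ms) / math.sqrt(n)
    half_width = T_95[n - 1] * std_err
    return mean, 100.0 * half_width / mean


# Hypothetical timings (ms) for five runs of one test.
steady = [100.0, 101.0, 99.0, 100.0, 100.0]   # quiet machine
noisy = [100.0, 180.0, 95.0, 60.0, 150.0]     # e.g. a busy VM

for label, runs in (("steady", steady), ("noisy", noisy)):
    mean, pct = mean_and_ci_percent(runs)
    print(f"{label}: {mean:.1f}ms +/- {pct:.1f}%")
```

With only a few runs per test, a single slow iteration is enough to blow the percentage up, which is why shutting down background processes makes such a difference.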