Sorry to mislead you, but this page is not about pair programming. Well, it is, but it’s mainly about a song about pair programming. Or is it a love song? Who can tell?

Pair programming is an approach where two developers sit side by side, sharing a keyboard and mouse, and work together on the same task or problem, critiquing, explaining, understanding, and supporting each other’s work, taking it in turns to “drive”. It’s a good environment for mentoring, sharing knowledge, preventing “siloing”, onboarding new developers, getting to know your colleagues, and working on difficult bugs. Obviously there’s close personal interaction in such a situation, so I thought I’d explore the romantic possibilities in a song!

While this song is entitled “Pair Programming”, there isn’t any direct reference to the activity of that name. It might use some terminology familiar to those who practise it, but I’ve deliberately used double meanings and ambiguity to leave things “uncommitted”. Hopefully it will still make sense to someone who has no idea what pair programming is and takes the sentiments at face value! I’m not going to list all the puns, but with luck some of them will elicit a few groans. My favourite line is “we could share a set of keys”, which could refer to the humble shared keyboard, but more intriguingly to the prospect of moving in together. Similarly, does “making something beautiful, all it takes is you and me” refer to their co-written code, or forthcoming offspring? There is a minor break of the “4th wall” when our protagonists refer to “striking up a little nerd duet”.

The final line, repeated a few times, refers to “writing love letters to the people that we’re going to be”. This is a reference to a nice programming maxim: write clean, understandable code as a love letter to your future self, who will thank you when you revisit it to refactor or revise it, possibly many years later. Here though, they could easily be talking about each other.

I’ve said before that I often start out with how I want a song to feel, rather than any particular genre, instrumentation, or content; this one is no different. In terms of influences, this song owes a lot to “You + me”, by Public Service Broadcasting, from the lovely “Every Valley” album. It’s just a beautiful, simple duet, and from a production perspective I love the initial dry mix with that clean guitar that slowly builds into full orchestral backing. I don’t understand a word of Welsh though 🤷‍♂️.

Vocals are, again, courtesy of Synthesizer V, newly upgraded to version 2.0, using the Solaria and NOA Hex voice databases. It’s possibly the most complex set of vocals I’ve written, from both a lyrical and harmonic perspective. Building those harmonies was quite tricky. I also made much better use of the UJAM Amber2 virtual guitarist plugin I bought for “I’ve only met you once”, using more of its pattern library. I decided to go to town a bit for the orchestral backing, so in addition to Logic’s lush Studio Strings, we also have a wind ensemble (bassoon, clarinet, oboe, cor anglais, and flute), and some extra clarinets.

Bass and drums are, as usual, using Logic’s built-in Bass and Drum kit libraries, played by Logic’s excellent players.

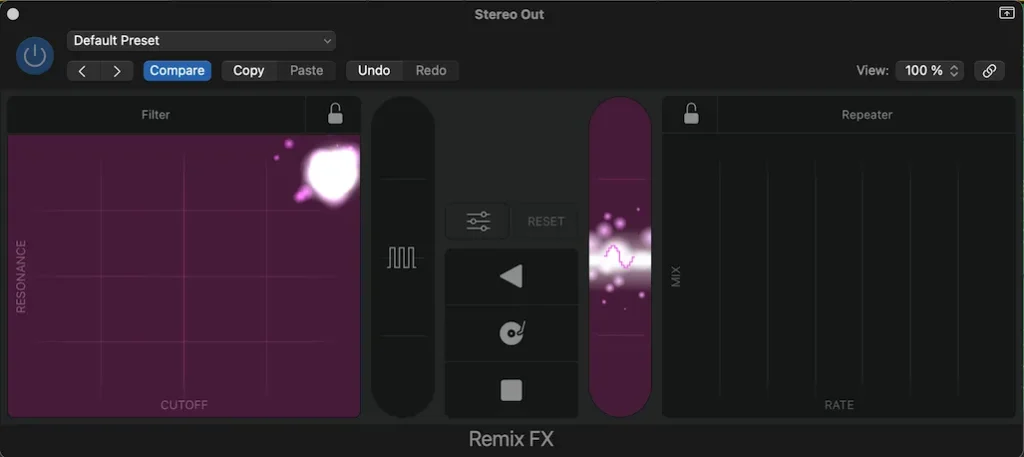

The overall mix is really quite simple (there are only 9 tracks), but I used a lot of automation, particularly on the reverb sends on the voices. Dry voices have a certain raw appeal, but they really come to life with a little ambience, and I wanted to capture that transition, which you can hear in the first verse.

To finish it off with a little more romance, I commissioned a sweet, flamenco-ish nylon-string guitar solo from Spanish guitarist Javi Sanchez on Fiverr.

Anyway, this duet is sung by a pair of developers who are clearly into each other, and I wish them all the luck in the world.

[M verse]

I love flying solo

I’ve made the whole world I can see

I might be going in the wrong direction,

but I’m enjoying being free.

I could use another point of view

to put my feet back on the ground.

Somebody to take my hand

guide me back in to land

[F verse]

I see the words fall from your lips

in ideas that flowed from fingertips.

You’ve got my attention now,

we just need to work out how

you’re finishing my sentences,

reading between the lines.

Together we’ll make something

beautiful.

[Chorus]

We’re writing love letters

to the people that we’re going to be.

I was hoping that perhaps one day

we could share a set of keys.

With you by my side we’d be a force

taking turns to take the lead.

We’re building something beautiful

all it takes is you and me.

[Verse]

I’m staying up reading your every last letter

wondering if I could have said it any better.

Your echoes filling the gaps I’ve left,

striking up a little nerd duet.

I love the way you question

every choice I think I’ve made.

Not afraid to challenge, reconsider, rearrange.

When I’m sitting side by side with you

one and one is sometimes more than two.

[Chorus]

We’re writing love letters

to the people that we’re going to be.

I was hoping that perhaps one day

we could share a set of keys.

With you by my side we’d be a force

taking turns to take the lead.

We’re building something beautiful

all it takes is you and me.

[Outro]

We’re writing love letters

to the people that we’re going to be.

If you like this song, please consider supporting me by buying my albums on Bandcamp, and sharing links to my music on your socials.