Wherever you go, you meet people fleetingly (on trains and planes, on chairlifts, in airports and theme park queues, at conferences, up mountains, etc.), and the vast majority of the time you never see those people again. Yet so often these encounters are good experiences, and not continuing them makes you feel like you’ve lost something. Obviously you can’t be BFFs with everyone, as there’s just not enough time in the day, but occasionally it’s really worth chasing these opportunities and staying in touch with people that you’ve had a good time with. This might seem obvious, but I wanted to write a song about the feeling of being so overwhelmed by meeting so many people that you can feel a bit lost, and at the same time the joy of meeting someone that you really like and putting in some effort to keep the ball rolling.

Now I’m not talking about dating, just finding people that you get on with — but who knows where such relationships might go, and the distant aroma of chemistry is always there.

So, onto the song. Pretty much all of my songs start from an idea about how I want them to feel (instrumentation, genre, etc.) before I get into any specifics. Now, living in France means I get exposed to French music, which some find horrifying, but it’s not all bad! I was driving to go skiing one morning when I heard “Parce qu’on sait jamais” (“Because you never know”) by Christophe Maé. It dates from way back in 2007, but it’s a timeless formula of happy, laid-back vocals and damped, strummy acoustic guitar, all pitched slightly high, and I thought I’d like to try writing something like that. Another song that fits this description is Bruno Mars’ “The Lazy Song”. I like Bruno’s relatively high voice, but this makes it a hard target for me to try to emulate! Overall, it’s an upbeat, happy song that’s unashamedly “pop”.

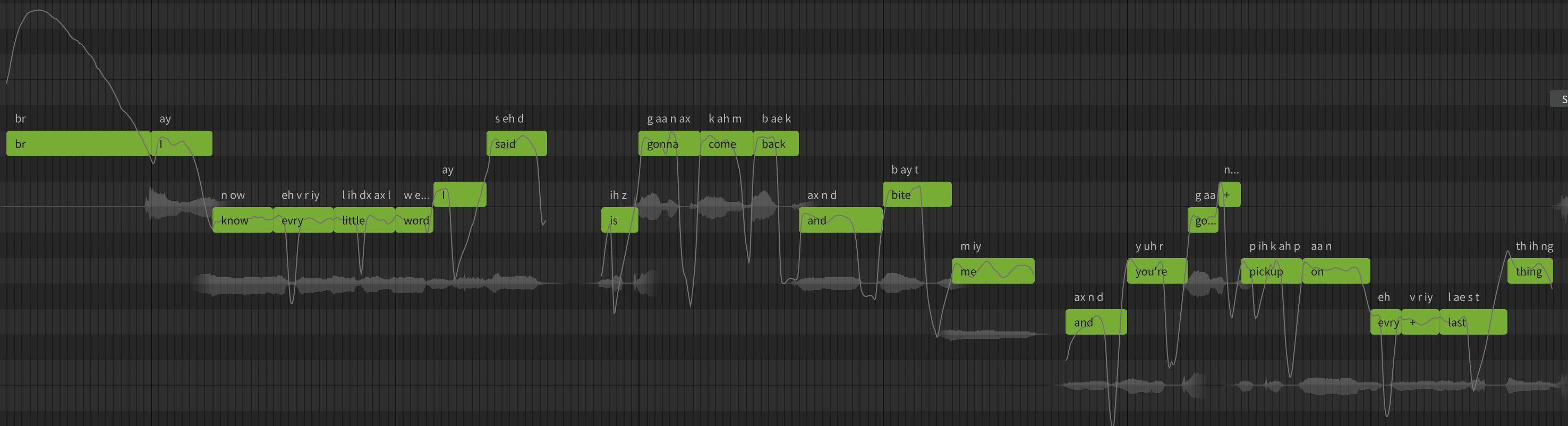

My songs so far have featured either me (with built-in limitations!) or my trusty voice synthesiser, Synthesizer V (SV), on vocals using a female voice called Solaria, and this has led to a certain uniformity. I wanted to invest in a different voice for SV, and after listening to lots of demos, I bought NOA Hex by Audiologie, which isn’t as high-pitched as I would have liked, but it’s not bad. I don’t think it’s as good as Solaria, which is far smoother, more delicate, and much more believable. NOA Hex sounds like it’s been recorded with the singer too close to the mic, and it’s difficult to stop it sounding overdriven. The breath intake sounds are excessively noisy (like someone about to sneeze), and some of the pronunciations are very odd: “you” often comes out as some weird “yuuuueh”, and things involving “L” sounds come out like someone struggling with a tongue-twister. I found a tip on the SV forums suggesting lowering the “tension” parameter, and that really does help make it sound less aggressive. Automating that parameter works really well, and is easier than automating its many voice modes. Most importantly, it sings much better than I do, so I have used it on other songs too, like “Setting sail, coming home”, which I actually redid after this song but released before it.

For the main guitar part I wanted to have fast, clean strumming like Christophe’s song, but found that while I could hit the chords, I couldn’t get it smooth enough, especially when combining with the right level of damping. Luckily I found a great deal on Amber2 by UJam, a virtual acoustic guitarist plugin that can hit these fast strums very cleanly.

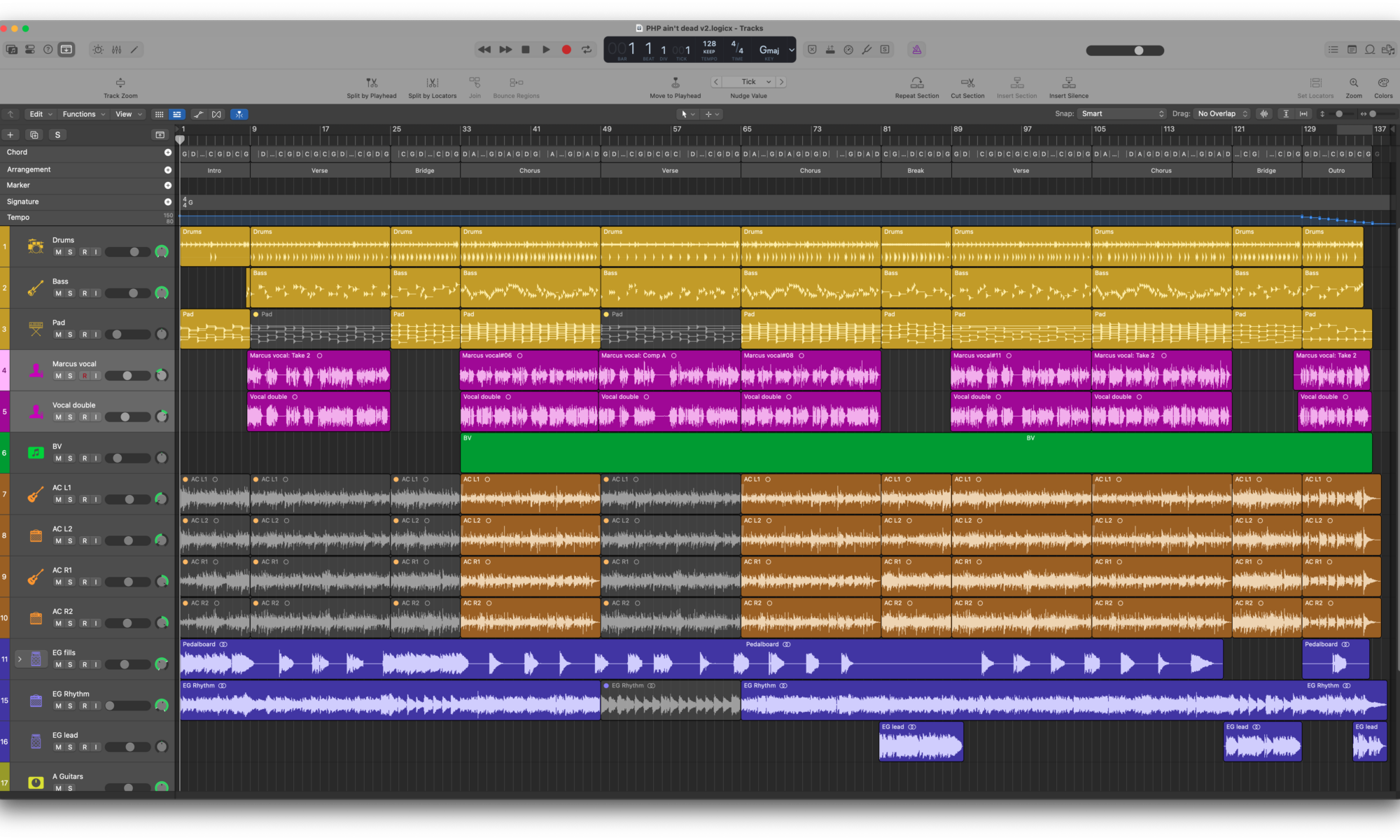

It took me a while to get to grips with this instrument, but it’s quite clever. You feed it the chords you want it to play (which can be generated by Logic’s keyboard player), shown in the lilac area on the right, and it plays them using various strumming and picking patterns selected by the keyswitch regions of the orange “phrase” keyboards on the left and centre. There are multiple sets of style phrases that can be switched into the central keyboard, and these can be changed dynamically through Logic’s automation. Lastly, damping can be controlled dynamically via a MIDI modulation controller, though this is quite a coarse control – there are only about 3 damping levels. Amber2 really relies on automation to make its output sound authentic, and the result is quite impressively believable. It also has some built-in effects processing, control over mic vs pickup balance, and some overall voicing choices. Most of the song uses two instances of this plugin with similar, but not identical, configs, panned left and right to give a nice big stereo sound. A third instance provides some quieter picked melodies. This plugin isn’t in any sense AI; it’s a huge sample library (7GB) with some clever switching and layering abilities. UJam have milked this formula, and produce several similarly-styled plugins, such as Silk for nylon-string guitar and Sparkle for electric rhythm guitar.

Drums, bass, and a backing pad are provided by my go-to Logic players, which are really pretty good. They use Logic’s built-in Bass instrument, Retro Synth for the pad, and Logic’s Studio Strings ensemble for additional pads, like at the end of the first verse.

I had a little trouble keeping the lyrics on topic; it’s just too easy to wander off in the direction of post-successful-first-date rather than staying in chatted-on-a-chairlift territory, but I figured a little romance can’t hurt. The verses are all about meeting the multitudes with a hint of trepidation, while the intro and chorus are celebrations of a single promising encounter. But hey, the chorus is repeated, so there’s room for one more!

I wanted the second chorus to be a vocal monster, with a ton of harmonies sung by all those new friends, but with a hint of a duet lurking in there, and Synthesizer V delivers the goods, as usual.

The icing on the cake was a soulful harmonica solo and a few flourishes, played by Hector Ruano from far-off Venezuela via Fiverr. I wasn’t quite aware of the limitations of a harmonica, but a typical diatonic harp is tuned to a single key and only offers a complete scale over about one octave. This obviously limits the range and melodic choices, but it makes up for it with a huge range of expression that Hector really pushed, with great results.

Having got together what I thought was my final mix, I listened to it in my car, and found the vocals too loud, and the guitar inaudible, so I fixed that.

I’ve ended up with a sweet, simple, happy song that I’m very pleased with. Hopefully the lovely people I’ve met who have acted as my muses will see themselves in it!

Rather than release the song myself, I chose to submit it to the “Bonks of Spring” BonkWave compilation, which meant that I had to sit on it for a few weeks…

After I submitted it, the person doing the mastering pinged me to suggest using “telephone” equalisation on the intro, and had done a rough version using mastering tools. I liked the idea, and it didn’t sound bad, but I thought it would work much better if done in the mix. So I did the EQ there and added a vinyl emulation, which contributes a few clicks and pops, some pitch drift, and general grungy dustiness. It really makes the full-bandwidth instrumentation pop when it all kicks off.
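For the curious, a “telephone” EQ is essentially a band-pass filter that throws away the lows and highs. The sketch below is only a rough illustration of that general idea, not the processing actually used on the track. It assumes the commonly quoted telephone voice band of roughly 300 Hz to 3.4 kHz and a 44.1 kHz sample rate, and it chains a second-order high-pass and low-pass using the standard “Audio EQ Cookbook” biquad formulas. All the function names are made up for illustration.

```python
import math


def biquad_coeffs(kind, cutoff_hz, sample_rate, q=1 / math.sqrt(2)):
    """Cookbook coefficients for a 2nd-order low-pass or high-pass filter.

    Returns (b, a), normalised so that a[0] == 1.
    """
    w0 = 2 * math.pi * cutoff_hz / sample_rate
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    if kind == "lowpass":
        b = [(1 - cos_w0) / 2, 1 - cos_w0, (1 - cos_w0) / 2]
    elif kind == "highpass":
        b = [(1 + cos_w0) / 2, -(1 + cos_w0), (1 + cos_w0) / 2]
    else:
        raise ValueError(f"unknown filter kind: {kind}")
    a0 = 1 + alpha
    a = [1.0, -2 * cos_w0 / a0, (1 - alpha) / a0]
    return [coeff / a0 for coeff in b], a


def biquad(samples, b, a):
    """Run one biquad section over a list of samples (direct form I)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


def telephone_eq(samples, sample_rate=44100, low_hz=300.0, high_hz=3400.0):
    """Assumed 'telephone' band: cut below low_hz and above high_hz."""
    hp_b, hp_a = biquad_coeffs("highpass", low_hz, sample_rate)
    lp_b, lp_a = biquad_coeffs("lowpass", high_hz, sample_rate)
    return biquad(biquad(samples, hp_b, hp_a), lp_b, lp_a)


def sine(freq_hz, sample_rate=44100, seconds=0.5):
    """Unit-amplitude test tone."""
    count = int(sample_rate * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(count)]


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


if __name__ == "__main__":
    for freq in (50.0, 1000.0, 10000.0):
        filtered = telephone_eq(sine(freq))
        settled = filtered[len(filtered) // 2:]  # skip the start-up transient
        print(f"{freq:>7.0f} Hz: RMS in {rms(sine(freq)):.3f}, out {rms(settled):.3f}")
```

Running it on test tones shows the mid-range passing almost untouched while the bass and treble are heavily reduced, which is what gives that thin, boxy sound. A real plugin would usually use steeper slopes and add some distortion too.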

If you’re not familiar with it, BonkWave is a non-genre genre, so all tracks are simultaneously both BonkWave and NotBonkWave, as an amusing poke at the ever-finer and increasingly less-distinguishable slices that constitute genres in modern music. In practice it tends to be associated with indie-minded, self-publishing musicians like myself, and has a bit of a slant towards various forms of electronica. If you’re wondering whether your track is BonkWave, be assured that it is. And isn’t.

There was a release party for this album on the evening of June 13th, and it was really nice to have been included. I felt this song was a bit out of place – it’s a very conventional pop song, lurking amongst swaths of swirly electronica. However, it was of course still #BonkWave, as well as #NotBonkWave, and someone kindly described it as a “palate cleanser”! Several people at the party were a bit shocked to discover that the vocals were all synthetic. Maybe I’ll try something more “swirthy” next time…

Since I’ve published a few tracks that are distinctly not about developer stuff and didn’t really fit into my “Developer Music” album, I thought I’d start gathering my tracks in a new album I’ve called “People Music”. The only other change I made was to move my track “Uncomfortable” to the new album, as it fits better there.

[Intro/verse]

I had a feeling when I first saw you

that you were someone that I needed

to fill a gap in me that I didn’t know was there.

If you’d told me this would happen,

I’d never have believed you

[Verse]

First impressions count and I’m running out of numbers.

Feeling anxious when making new friends.

I’m not too good at this, the pressure that I’m under

never knowing quite how it will end.

So many names, so many faces,

meeting up in so, so many places.

It’s hard to tell who you’ll ever see again

but every now and then you find someone special.

[Chorus]

I’ve only met you once but that was all I needed

to have my expectations all exceeded.

I’ve only met you once; you’re what I’m looking for:

a new friend for life, or maybe something more.

I’ve only met you once, you might not feel the same,

but from that first time we met, I just wanted to see you again.

I’ve only met you once, but I’d like to make it twice.

To feel this way is crazy when I’ve only met you once.

[Verse]

Every little encounter could be the start of something new,

but it’s so full of potential that I just don’t know what to do.

The world’s so full of people, and only so much time.

I can’t be friends with everyone, but you and me get along fine.

Smiling faces, shaking hands, kissing hello and goodbye.

It’s not the time for feeling shy, sometimes you just gotta try.

Make new friends wherever you go, and you’ll never be on your own.

A face in the crowd sticks in my mind; you’re the one that I wanted to know.

[Chorus]

I’ve only met you once but that was all I needed

to have my expectations all exceeded.

I’ve only met you once; you’re what I’m looking for:

a new friend for life, or maybe something more.

I’ve only met you once, you might not feel the same,

but from that first time we met, I just wanted to see you again.

I’ve only met you once, but I’d like to make it twice.

To feel this way is crazy when I’ve only met you once.

If you like this song, please consider supporting me by buying my albums on Bandcamp, and sharing links to my song posts on your socials.