This is not a happy song. I had the initial ideas for it in about 2010, when I wrote the lyrics of the first verse and chorus and had a vague idea of how I wanted the synths to sound in the chorus. I had a few attempts at singing and recording it, but nothing really came out how I wanted it, so I sat on it for 15 years. It’s really the complete antithesis of my recent song Pair Programming, presenting the perspective of someone stuck in a long-term relationship that seems to be slowly fading into indifference, making them feel lost and unwanted. I told you it wasn’t happy!

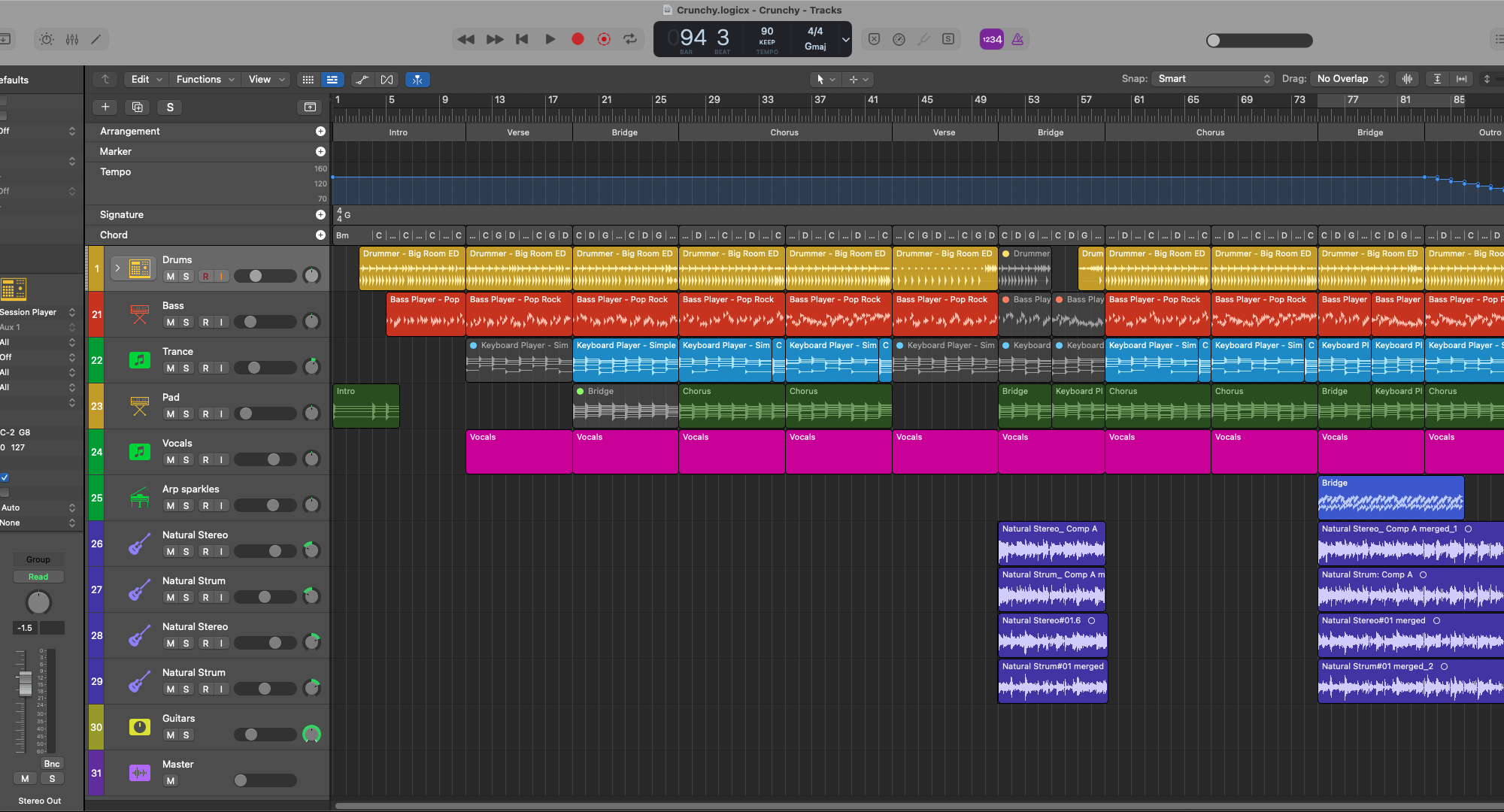

After discovering the amazing abilities of synthetic vocals a couple of years ago, I set about resurrecting and completing this song. I had always wanted it to have a Depeche Mode “Shake the Disease” vibe, with perhaps a bit of Front 242 aggression. I recently got UAD’s PolyMAX synth plugin in a free offer, and it’s a really great synth: much simpler than the impOSCar3 that I used in Dancing By Myself, but it still sounds fantastic, particularly its unison and per-voice stereo panning features. It provides the primary “zeow” in the chorus, the jingly verse chords, a pad in the second chorus, and the “jets” noise bursts. I used Logic’s ES2 for the sharp, metallic melody in the verse, and Alchemy for the stabs and the awesomely violent bass in the chorus. The bass in the verse is a venerable Korg Wavestation, one of my all-time favourite synths.

Two instances of Logic’s drummer players provided drums using Logic’s Drum Machine Designer instrument and the “Heavy Industry” kit.

It’s harmonically curious. There are only really two chords, B♭ major and B minor, with a little Asus2 at one point; their roots (A, B♭, and B) sit on adjacent semitones, and the chords don’t really belong to any particular key.

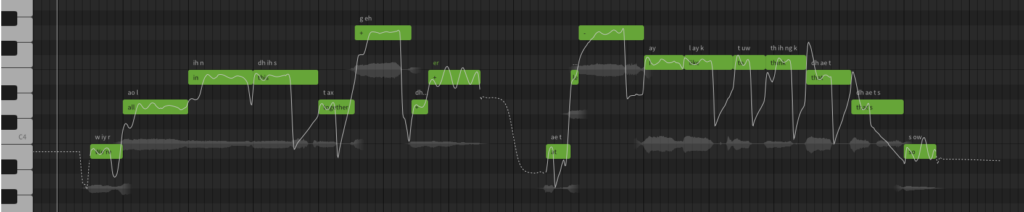

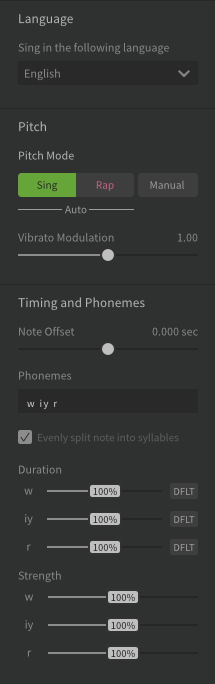

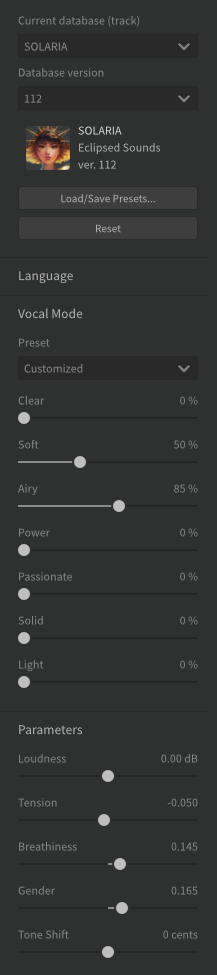

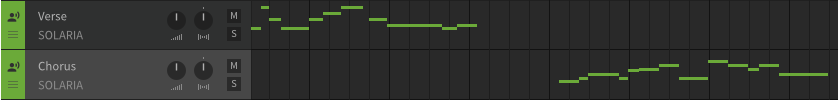

The vocals are, as usual, provided by Synthesizer V. The lead is Noa HEX, and the backing vocals are Solaria II. SV has improved a lot in version 2, but I ended up not really using any automation of voice parameters; per-group voice settings were enough, as the style is pretty consistent all the way through the song. Solaria still sounds great; I particularly like the slow melody on the backing vocals in the second verse, and the somewhat discordant and unexpected harmonies in the second chorus.

The a cappella section in the middle provides a dramatic contrast with its isolated vocals and hopeful message. This was fun to construct, but it was quite hard to stop the voices sounding a bit artificial, especially the lower one.

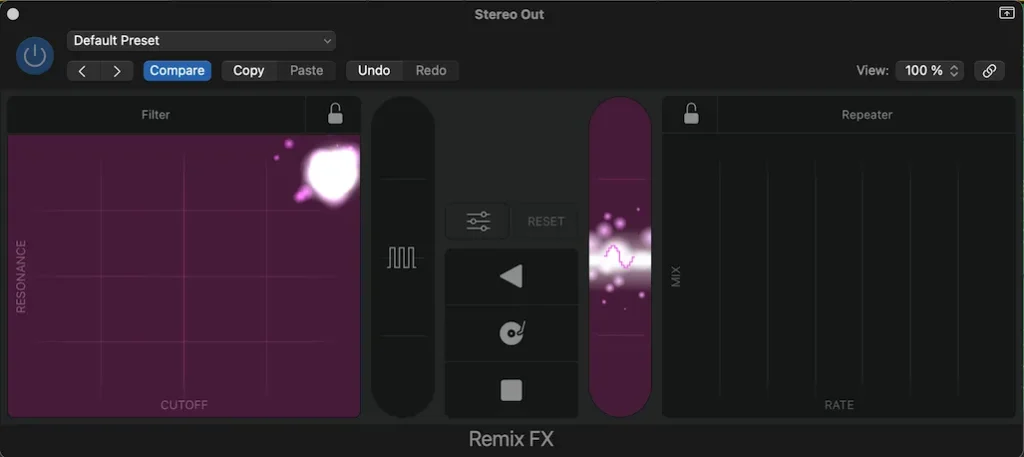

I used Logic’s lovely-sounding Quantec Room Simulator for the main reverb, lots of bitcrushing and distortion on the “zeow” and chorus bass, sonible smart:EQ4 to handle masking and balance, and Logic’s mastering module placed after an instance of the famous SSL bus compressor.

All in all, I wanted aggression, discordance, and discomfort from this very depressing song, and I think it delivers them quite effectively.

[Verse]

We’ve been together forever, never apart.

But it seems like you’re a long way from my heart.

And though I’m beside you, it’s as if I’m not there.

Wanna feel your arms around me, your hands in my hair.

We’ve made so many memories, right from the start.

Yet now it feels like we’re worlds apart.

Though we walk side by side, I sense a divide.

Need the warmth of your love, you back on my side

[Chorus]

Why aren’t you close when you are near?

Where are the words that I’m longing to hear?

How can I reach you when it’s you I can’t touch?

Is a sign of affection just asking too much?

How can you hide when you’re standing so near?

Is this isolation the thing that I fear?

How can I reach you when it’s you I can’t touch?

Is a sign of affection just asking too much?

[A cappella break]

Even as our love descends

I want our fairy tale to have a happy end

Even as our love descends

I want our fairy tale to have a happy end

Even as our love descends

I want our fairy tale to have a happy end

Even as our love descends

I want our fairy tale to have a happy ending

[Verse]

You’re lying beside me when we’re going to sleep

but I wake and you’re gone, leaving cold, empty sheets.

No kisses or hugs, no touch of a hand;

None of this is going the way that I planned.

We used to share dreams, our futures combined

now silence fills the air, and I wonder why.

The laughter we had, the love we once knew,

is fading away and I don’t know what to do.

[Chorus]

So why aren’t you close when you are near?

Where are the words that I’m longing to hear?

How can I reach you when it’s you I can’t touch?

Is a sign of affection just asking too much?

How can you hide when you’re standing so near?

Is this isolation the thing that I fear?

How can I reach you when it’s you I can’t touch?

Is a sign of affection just asking too much?

If you like this song, please consider supporting me by buying my albums on Bandcamp, and sharing links to my music on your socials.