Several years ago I played Bastion by Supergiant Games. I’m usually a first-person-shooter gamer, but this was a very enjoyable alternative. Two particularly notable things about this game are the narration and the fantastic soundtrack by Darren Korb.

I enjoyed it so much that I thought I’d try making cover versions of some of the tracks. Before I started releasing tracks of my own, I did this a lot, and it really helped me learn how to build tracks: how to listen for the subtleties of the originals, break things out, and then build up a mix. I find that making covers frees you from the need to write melodies, chords, and lyrics, letting you concentrate on just playing stuff and producing. I’ve never released any of my cover versions before; some of them are quite bad, but I’m pretty happy with this one!

So in July 2021 I recorded versions of “Build That Wall”, “Mother, I’m Here” and the closely related medley presented here, “Setting Sail, Coming Home”. I’m not releasing the other two, at least not in their current state!

I tracked down the sheet music for this song, along with some YouTube videos of others playing it. The first thing I noticed is that the acoustic guitar tuning is very unusual, dropping the lowest strings really low, meaning that none of the chord patterns I was familiar with were much use!

First I played the guitar parts. These low tunings sounded so great on fresh strings that I really enjoyed making a big, deep stereo image with them. It would help to play this on a big dreadnought-style guitar: on my relatively small-bodied Crafter acoustic, some of the notes ended up a bit thin and rattly. That’s ok within the somewhat rough style of the tracks, and some EQ helped.

I had real trouble singing this song: it was well outside my range at both the high and low ends, and I sounded very strained. I got my elder daughter to sing the female part (originally sung by Ashley Barrett), and that worked ok; she sings very nicely, but seems to find it harder when she’s being recorded and the pressure is on! So I concentrated on the production side, fixed what I could, and then sat on the tracks for a few years.

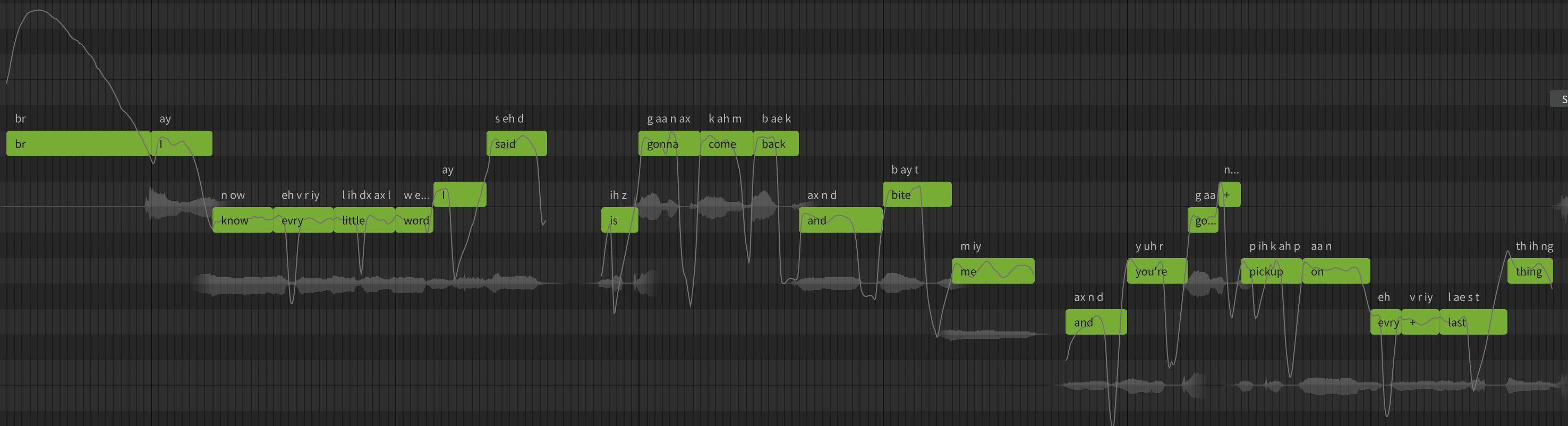

Fast forward to 2025: I discovered the wonders of vocal synthesisers and got a male voice database that suited the main vocal line of this song much better, so I decided to revisit the track with a view to releasing it. I also tried converting my daughter’s recording into a synthesised track (a new feature in SV 2.0). That worked well, but in the end I used it to double-track her line rather than replace it. The female vocal in this song is more background than lead, and the pair of tracks makes for a nice stereo field, leaving the male vocal in the middle.

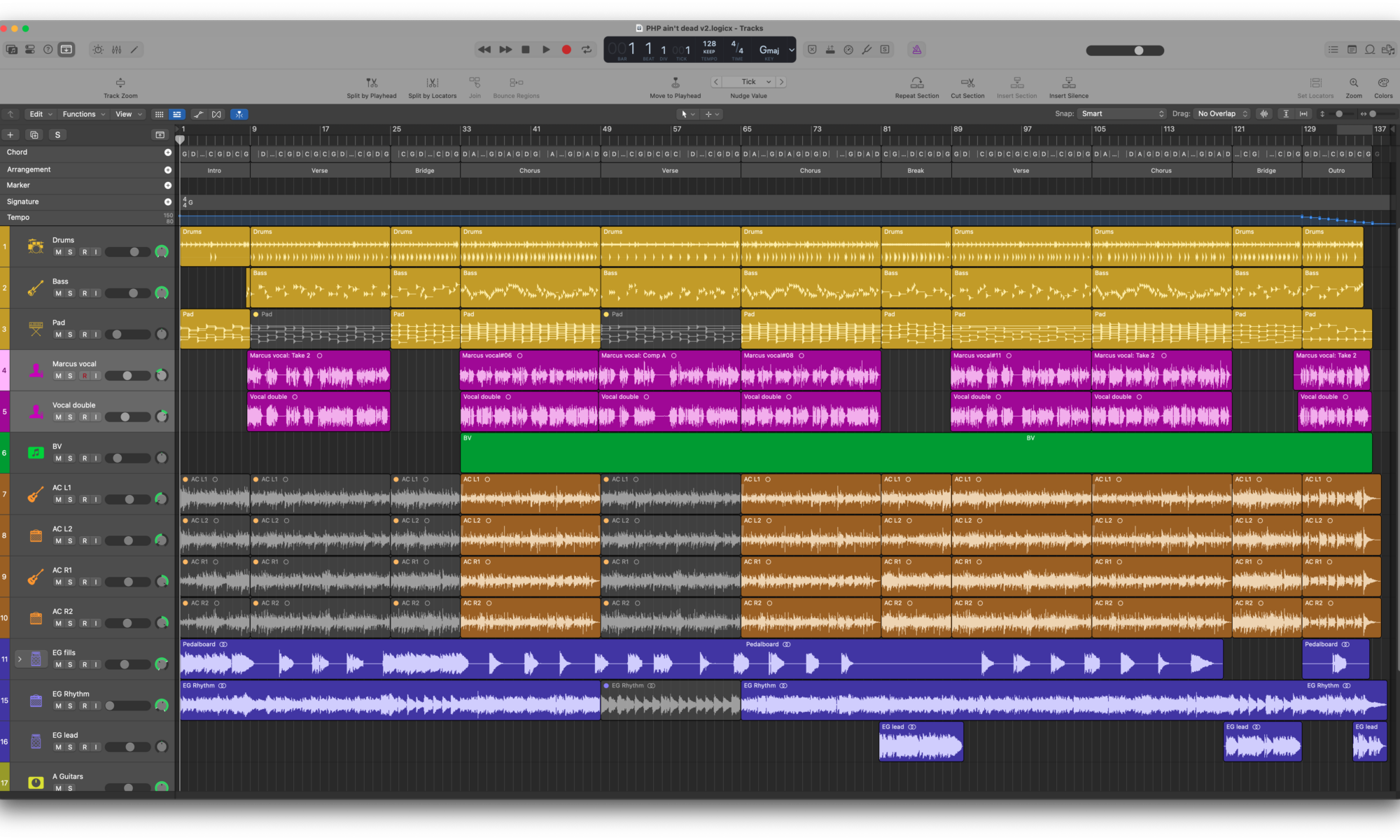

The middle instrumental section uses a nice sampled zither instrument, coupled with some slidy acoustic guitar notes and lots of delay. Gotta love those reversed cymbals too!

The bass is played on a keyboard, with a sub-bass oscillator making it very deep. The drums are also played on the keyboard, using a grungy sampled electronic kit stuffed through a distortion pedal and compressed to the extreme. I chose not to copy the massive 2.6 kHz band-pass EQ on the original drums, going for a fuller-bodied sound instead. The wah-wah synth pad is from Alchemy, and the general string pad is a Logic sampler instrument.

Listening back to the original, my version sounds slicker and more expansive, but lacks some of the original’s simple appeal. That’s down partly to my production preferences (I’m a big fan of Trevor Horn and Steve Lipson’s 1980s work for Grace Jones and Frankie Goes To Hollywood), and partly to my lesser capabilities as a musician! Anyway, I enjoyed making it.

If you like this song, please consider supporting me by buying my albums on Bandcamp and sharing links to my music on your socials.